
Vision Experiment Manager

Vision Experiment Manager is a Python-based software framework for automating experiments that study vision at the retinal and cortical levels. Several applications depend on the framework: visual stimulation, behavioral control and online analysis tools. Besides experiment automation, it focuses on preprocessing and quick analysis of data, giving the experimenter immediate feedback and supporting further analysis at higher levels.

The framework's applications can be interfaced with several measurement methods: calcium imaging, single patch electrode recordings or multielectrode array recordings. A screen or projector is the most common stimulation device, but lasers and LED light sources are also supported.

Vision Experiment Manager is being developed in Botond Roska's research group at the Friedrich Miescher Institute. The foundations of the framework were designed together with Daniel Hillier.

Supported Experimental Setups

- Femtonics Rollercoaster (RC) two-photon microscope

- Femtonics Acousto-optic (AO) two-photon microscope

- Retinal two-photon microscope

- Multichannel Systems multielectrode array

- Hidens multielectrode array

- Behavioral setup

- Cortical ultrasound setup (under development)

Contribution to Publications

The following research projects used Vision Experiment Manager:

Antonia Drinnenberg et al.

How diverse retinal functions arise from feedback at the first visual synapse

Neuron, 2018

Keisuke Yonehara et al.

Congenital Nystagmus Gene FRMD7 Is Necessary for Establishing a Neuronal Circuit Asymmetry for Direction Selectivity

Neuron, 2016

Karl Farrow et al.

Ambient Illumination Toggles a Neuronal Circuit Switch in the Retina and Visual Perception at Cone Threshold

Neuron, 2013

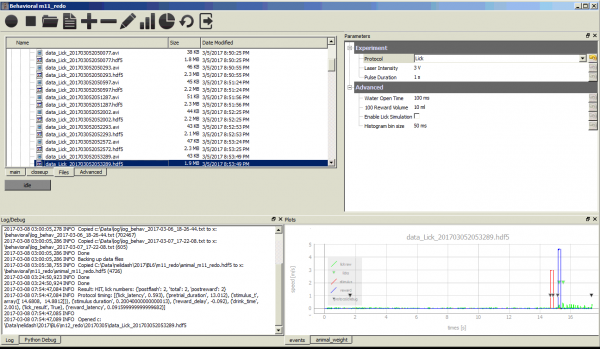

Behavioral Experiment Software

Controls mouse behavioral experiments and visualizes their results. The behavioral protocol runs in a microcontroller-based real-time environment.

Calcium Imaging Online Analysis

The main user interface of Vision Experiment Manager can analyze calcium imaging data. Data files can be opened for automatic or manual cell detection, and the results can be exported to MATLAB .mat files.
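Online calcium imaging analysis commonly reports each detected cell's fluorescence change relative to its baseline (ΔF/F). The sketch below is a minimal illustration in plain Python, assuming the baseline F0 is the mean of the first few pre-stimulus frames; it is not the framework's own analysis code, and `delta_f_over_f` and `baseline_frames` are hypothetical names introduced here.

```python
from statistics import mean


def delta_f_over_f(trace, baseline_frames):
    """Return (F - F0) / F0 for every sample of one cell's fluorescence trace.

    Hypothetical helper: F0 is assumed to be the mean of the first
    `baseline_frames` samples, recorded before stimulus onset.
    """
    if baseline_frames < 1 or baseline_frames > len(trace):
        raise ValueError("baseline_frames must be between 1 and len(trace)")
    f0 = mean(trace[:baseline_frames])
    if f0 == 0:
        raise ValueError("baseline fluorescence must be non-zero")
    return [(f - f0) / f0 for f in trace]


# Example: a cell whose fluorescence rises from 100 to 150 after stimulus onset.
roi_trace = [100.0, 100.0, 100.0, 150.0, 125.0]
print(delta_f_over_f(roi_trace, baseline_frames=3))  # → [0.0, 0.0, 0.0, 0.5, 0.25]
```

In practice the trace would come from averaging the pixels of a detected cell in each frame; that step is omitted here.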

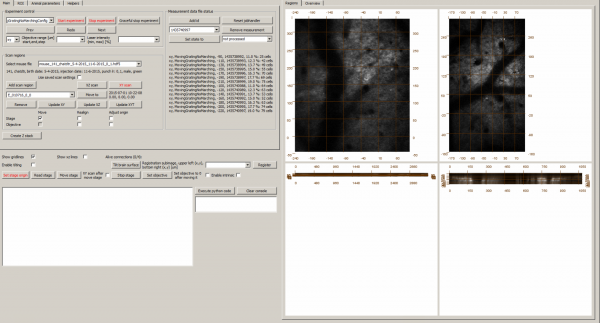

Vision Experiment Manager for Femtonics RC Microscope

The early version of the main user interface of Vision Experiment Manager has limited analysis functions and focuses on controlling the Femtonics RC microscope and the visual stimulation software. It manages scan regions, displays the online analysis status and controls the motorized stage and the goniometer used to align the sample. It is used for in vivo cortical calcium imaging in mice.

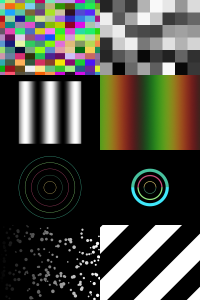

Visual Stimulation

Generates visual patterns for retinal or visual cortex stimulation: moving gratings, moving bars, natural stimuli, checkerboards and multiple dots.
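A moving grating is usually defined as a sinusoidal luminance profile whose phase advances over time, L(x, t) = L0 (1 + C sin(2π(x − vt)/λ)), with mean luminance L0, contrast C, speed v and spatial period λ. The sketch below computes one row of such a drifting grating in plain Python. It illustrates the standard formula only and is not the framework's renderer; the function name and parameters are assumptions made for this example.

```python
import math


def grating_row(width_px, spatial_period_px, speed_px_per_s, t_s,
                mean_luminance=0.5, contrast=1.0):
    """Luminance values (0..1) for one row of a drifting sinusoidal grating.

    Hypothetical sketch of L(x, t) = L0 * (1 + C * sin(2*pi*(x - v*t) / period)).
    A positive speed moves the pattern toward increasing x.
    """
    shift_px = speed_px_per_s * t_s  # distance the pattern has travelled at time t
    return [
        mean_luminance
        * (1.0 + contrast * math.sin(2.0 * math.pi * (x - shift_px) / spatial_period_px))
        for x in range(width_px)
    ]


# One period sampled at four pixels, at t = 0: roughly [0.5, 1.0, 0.5, 0.0]
print([round(v, 3) for v in grating_row(4, 4, speed_px_per_s=0.0, t_s=0.0)])
```

Rotating the x coordinate before evaluating the sine would give gratings moving in other directions.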

Additional features:

- Integrated with Vision Experiment Control; stimulus start is triggered via ZeroMQ (zmq)

- Interface to NI data acquisition cards, enabling control of external stimulation devices such as lasers and LED sources

- Stimulation files are in Python syntax

- Experiment control, trigger generation

- Filter wheel control

- Runs on Windows XP/7/10, Mac and Ubuntu Linux
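Because stimulation files are written in Python syntax, a protocol can be built with ordinary language constructs such as loops and comprehensions. The sketch below is purely hypothetical: `StimulusBlock`, `StimulusProtocol`, the pattern names and the parameter keys are invented for illustration and are not the framework's actual stimulus API.

```python
from dataclasses import dataclass, field


@dataclass
class StimulusBlock:
    """One hypothetical stimulus segment (names are illustrative only)."""
    pattern: str          # e.g. "moving_grating", "moving_bar", "checkerboard"
    duration_s: float
    params: dict = field(default_factory=dict)


@dataclass
class StimulusProtocol:
    """A hypothetical protocol: an ordered list of blocks, repeated `repeats` times."""
    name: str
    blocks: list
    repeats: int = 1

    def total_duration_s(self):
        return self.repeats * sum(block.duration_s for block in self.blocks)


# Contents of a hypothetical stimulation file: gratings in 8 directions, 3 repeats.
PROTOCOL = StimulusProtocol(
    name="direction_tuning",
    blocks=[
        StimulusBlock("moving_grating", 4.0,
                      {"direction_deg": d, "period_um": 300, "speed_um_s": 1200})
        for d in range(0, 360, 45)
    ],
    repeats=3,
)

print(PROTOCOL.total_duration_s())  # → 96.0
```

Keeping the protocol as plain data makes it easy to check durations or parameters before a recording starts.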

Related Publications

Daniel Hillier, Michele Fiscella, Antonia Drinnenberg, Stuart Trenholm, Santiago B Rompani, Zoltan Raics, Gergely Katona, Josephine Juettner, Andreas Hierlemann, Balazs Rozsa & Botond Roska

Causal evidence for retina-dependent and -independent visual motion computations in mouse cortex

Nature Neuroscience, 2017

A Wertz, S Trenholm, K Yonehara, D Hillier, Z Raics, M Leinweber, G Szalay, A Ghanem, G Keller, B Rozsa, K Conzelmann, B Roska

Single-cell-initiated monosynaptic tracing reveals layer-specific cortical network modules

Science, 2015

T Szikra, S Trenholm, A Drinnenberg, J Juttner, Z Raics, K Farrow, M Biel, G Awatramani, D Clark, J Sahel, R da Silveira, B Roska

Rods in daylight act as relay cells for cone-driven horizontal cell-mediated surround inhibition

Nature Neuroscience, 2014

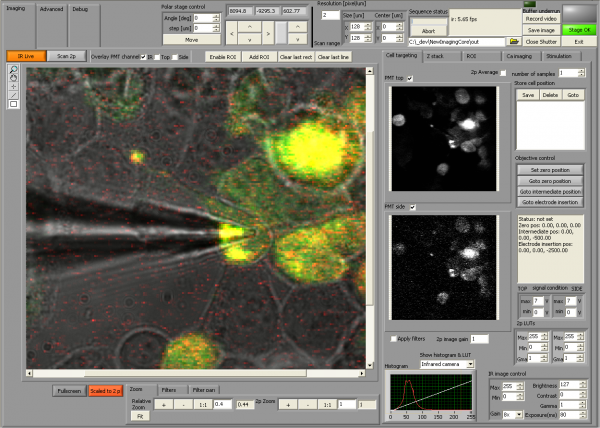

Two-Photon Imaging

Controls the two-photon microscopy setup

- Two-photon imaging

- Calcium imaging

- Infrared camera control

- Stage control

- Advanced visualization of image channels, including user-defined lookup tables and many filters

- Two-photon and infrared images can be overlaid

- Z stack

- Can be triggered over the network from Vision Experiment Manager

- Written in LabVIEW
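The imaging software can be triggered over the network, but the wire protocol is not described here. The sketch below is therefore entirely hypothetical: a plain TCP listener built with the Python standard library waits for a newline-terminated `START` command and replies `OK`, standing in for the LabVIEW side, while `send_trigger` plays the role of Vision Experiment Manager. The command strings and function names are assumptions.

```python
import socket
import threading


def serve_one_trigger(server_sock, on_start):
    """Accept one connection; call `on_start` if the hypothetical START command arrives."""
    conn, _ = server_sock.accept()
    with conn, conn.makefile("rwb") as stream:
        command = stream.readline().strip()
        if command == b"START":
            on_start()                   # e.g. begin a scan on the imaging side
            stream.write(b"OK\n")
        else:
            stream.write(b"ERROR unknown command\n")
        stream.flush()


def send_trigger(host, port, command=b"START"):
    """Client side: send one newline-terminated command and return the one-line reply."""
    with socket.create_connection((host, port), timeout=5) as sock, \
            sock.makefile("rwb") as stream:
        stream.write(command + b"\n")
        stream.flush()
        return stream.readline().strip()


if __name__ == "__main__":
    started = threading.Event()
    server = socket.create_server(("127.0.0.1", 0))  # port 0: the OS picks a free port
    worker = threading.Thread(target=serve_one_trigger, args=(server, started.set))
    worker.start()
    print(send_trigger("127.0.0.1", server.getsockname()[1]))  # → b'OK'
    worker.join()
    server.close()
    print(started.is_set())  # → True
```

A request/reply exchange like this lets the controlling software confirm that the acquisition actually started before it launches the stimulus.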

Contribution to Publications

Akihiro Matsumoto et al.

Direction selectivity in retinal bipolar cell axon terminals

Neuron, 2021

Emilie Macé et al.

Targeting Channelrhodopsin-2 to ON-bipolar Cells With Vitreally Administered AAV Restores ON and OFF Visual Responses in Blind Mice

Molecular Therapy, 2015

Keisuke Yonehara et al.

The First Stage of Cardinal Direction Selectivity Is Localized to the Dendrites of Retinal Ganglion Cells

Neuron, 2013

Homemade Software

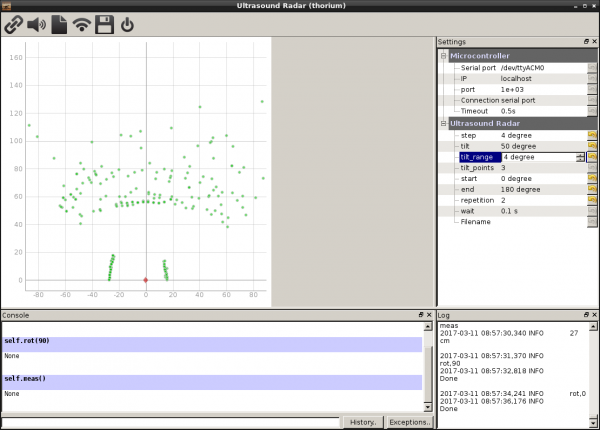

Ultrasound Radar (2017)

This application tests the HC-SR04 ultrasonic range finder. It drives servos that set the rotation and tilt of the HC-SR04, builds a map from the measured distances and displays it. The source code is on GitHub.
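The HC-SR04 reports distance as the width of its echo pulse: the sound travels to the obstacle and back, so distance = pulse width × speed of sound / 2. Combined with the servo angles, each reading becomes a 3D point. The sketch below shows that conversion in plain Python; it is not taken from the project's source, and it assumes that pan rotates about the vertical axis, that tilt is elevation above the horizontal plane, and that the speed of sound is 343 m/s (dry air at about 20 °C).

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumption: dry air at about 20 °C


def echo_to_distance_m(echo_pulse_s):
    """Convert an HC-SR04 echo pulse width to distance (out-and-back path, hence / 2)."""
    return echo_pulse_s * SPEED_OF_SOUND_M_S / 2.0


def to_cartesian(distance_m, pan_deg, tilt_deg):
    """Convert one (distance, pan, tilt) reading to (x, y, z) in metres.

    Assumed geometry: sensor at the origin, pan about the vertical axis,
    tilt measured as elevation above the horizontal plane.
    """
    pan = math.radians(pan_deg)
    tilt = math.radians(tilt_deg)
    horizontal = distance_m * math.cos(tilt)  # projection onto the horizontal plane
    return (horizontal * math.cos(pan), horizontal * math.sin(pan), distance_m * math.sin(tilt))


def build_map(readings):
    """Turn (echo_pulse_s, pan_deg, tilt_deg) readings into a list of 3D points."""
    return [to_cartesian(echo_to_distance_m(echo), pan, tilt) for echo, pan, tilt in readings]


# A 10 ms echo corresponds to about 1.7 m; sweep the pan servo at zero tilt.
points = build_map([(0.010, pan, 0.0) for pan in range(0, 181, 45)])
print(len(points))  # → 5
```

The resulting point list can then be drawn as a top-down map or a 3D scatter plot.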

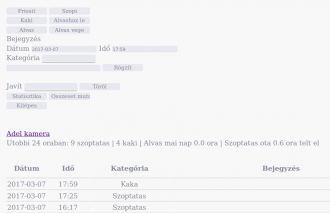

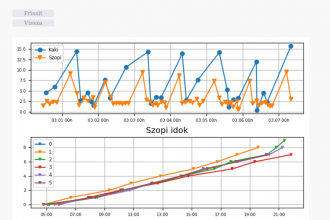

Adel Monitor (2016)

A Flask and SQLite based web application for tracking a baby's feeding times, weight gain and sleep time. Statistics are displayed on plots that help identify feeding and sleep patterns. The source code is available on GitHub.
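Feeding patterns can be summarized from timestamped records, for example as the average interval between consecutive feedings. The sketch below uses only Python's built-in `sqlite3` and `datetime` modules; the `feeding` table and its columns are a hypothetical schema, not the application's actual one, and the Flask layer that would serve these statistics is omitted.

```python
import sqlite3
from datetime import datetime

# Hypothetical schema: the real application's tables are not documented here.
SCHEMA = """
CREATE TABLE IF NOT EXISTS feeding (
    id INTEGER PRIMARY KEY,
    started_at TEXT NOT NULL  -- ISO 8601 timestamp, so text order equals time order
);
"""


def mean_feeding_interval_hours(conn):
    """Average time between consecutive feedings in hours, or None if fewer than 2 rows."""
    rows = conn.execute("SELECT started_at FROM feeding ORDER BY started_at").fetchall()
    times = [datetime.fromisoformat(row[0]) for row in rows]
    if len(times) < 2:
        return None
    gaps = [(later - earlier).total_seconds() / 3600.0
            for earlier, later in zip(times, times[1:])]
    return sum(gaps) / len(gaps)


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany(
    "INSERT INTO feeding (started_at) VALUES (?)",
    [("2016-05-01T06:00:00",), ("2016-05-01T09:00:00",), ("2016-05-01T12:30:00",)],
)
print(mean_feeding_interval_hours(conn))  # → 3.25
```

Storing timestamps as ISO 8601 text keeps them human-readable in the database while still sorting correctly with `ORDER BY`.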